I set my Neovim LSP error symbol to a skull and crossbones, so now whenever I write bad code I feel like a pirate. 🏴‍☠️

TIL guthub.com redirects to github.com. I’m sad it isn’t a recipe site.

Just got my zines from Julia Evans. Very excited to dig in.

I gave a short presentation on CSS Subgrid last night at the Astoria Tech Meetup. Lots of good conversations afterwards. Thanks to everyone who came out!

TIL MLP. Instead of MVP, the Minimum Viable Product, it’s the Minimum Lovable Product. I like that.

Getting my ass whipped at Magic: The Gathering at a friendly local draft. The game has changed a lot in twenty years (who’d have thought?). Lots of fun still.

Today is my second anniversary working for Lullabot. Joining the team is still one of the best decisions I’ve made. 😴🤖

TIL about the CSS cap unit, which is equal to the current font’s capital letter height.

It took me a while to figure out why the LSP was reporting that describe was not a valid method of string.

const id = label.toLowerCase().replaceAll(' ', '-').

describe('DisabledField', () => {
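
For anyone else who hits this, here is a rough sketch of what I think was going on. The stray dot at the end of the first line means the parser keeps reading, so describe on the next line becomes a property access on the string. The label value and the buggy() wrapper are made up for illustration; they are not from my actual test file.

```javascript
// Hypothetical label, only here so the example runs.
const label = 'Disabled Field';

// What I meant to write: the statement ends after replaceAll().
const id = label.toLowerCase().replaceAll(' ', '-');

// What the trailing dot turns it into. Newlines after a dot are ignored,
// so the next identifier is read as a property of the returned string.
function buggy() {
  return label.toLowerCase().replaceAll(' ', '-').
    describe('DisabledField', () => {});
}

// Strings have no describe method, so calling it throws at runtime.
let caught = null;
try {
  buggy();
} catch (err) {
  caught = err;
}

console.log(id);
console.log(caught instanceof TypeError);
```

The type checker sees the same chain statically, which would explain why the complaint mentions string rather than the test itself.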

One-liner to open all git modified files in your editor. Substitute your editor for nvim; statistically, that’s code.

git status -s | awk '{print $2}' | xargs nvim

I ordered three of Julia Evans’s zines.

I have the PDFs, but I’m waiting to read the physical copies. Very excited.

TIL about margin-trim from Apple’s What’s new in CSS video. Excited for when it’s broadly supported in browsers. Right now, it’s only in Safari.

Folks really don’t like git rebase. FUD or real? Both? Context: I love it.

So that was what happened to the jetpack dream.

Periodic reminder that I love Map().
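
A small sketch of a few things Map does that a plain object doesn't: keys can be any value (including objects), iteration follows insertion order, and there is a built-in size. The page objects and visit counts are invented for the example.

```javascript
// Hypothetical page objects used as keys; a plain object would
// coerce these to the same string key.
const home = { path: '/' };
const about = { path: '/about' };

const visits = new Map();
visits.set(home, 1);
visits.set(about, 3);

// Updating an existing key keeps its original position in the order.
visits.set(home, visits.get(home) + 1);

// Spreading a Map yields [key, value] pairs in insertion order.
const summary = [...visits].map(([page, count]) => `${page.path}: ${count}`);

console.log(visits.size);
console.log(summary);
```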

Why is it cold again? I just put away my long underwear. And by that I mean underwear I’ve had for a long time. Which I still have out. Never mind.

I biked from Bed Stuy to Astoria yesterday. It took about an hour, over mostly protected bike lanes. I’m still sore. 🚴🏻‍♂️

I was randomly thinking about 3 Doors Down today, specifically this line from Kryptonite.

If I go crazy will you still call me Superman.

That means the singer’s SO calls them Superman as a pet name.

There’s no way that isn’t sarcastic.

“Hey babe, do you have any Tylenol?”

“Sure thing, Superman.”

God help me, my daily text editor/IDE is Neovim, but I started using Emacs as an Org-mode-only app. I was using a limited Org mode in Neovim before, so who knows which is more of a lift.

TIL that the Friday the 13th Part 3 theme is a bop.

TIL that the tabindex attribute must not be specified on a dialog element. The more you know. 🌈

I was really into Disney’s The Sword in the Stone as a kid. I didn’t relate to the child Arthur; I wanted to be Merlin. This says a lot.

My wonderful colleague Kat Shaw is featured in The Drop Times’ article on celebrating women in Drupal. The profile is accurate! I feel lucky to work with her.

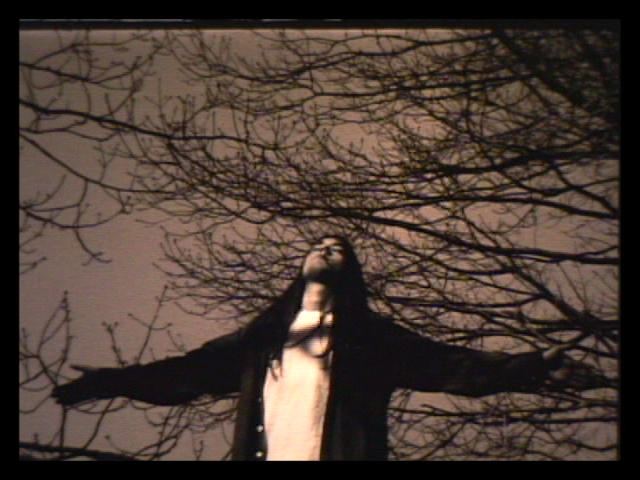

I found my friend’s 16mm student film I was in when I was around 17. Remember being a teenager and trying to be deep? This is that but filmed. Radiohead soundtrack. Smoking. Slow motion. Sepia. Falling. A gun. It’s all here.

New Brothers Grimm character: Rumpled Stiltskin. If you guess his name, he loses the bet and not only do you get to keep your baby, he has to iron its clothes.